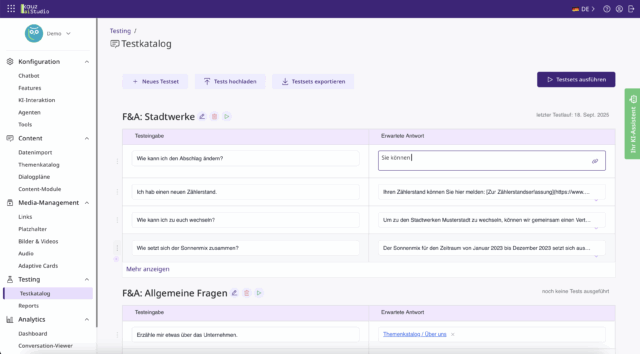

We asked ourselves how AI itself could make AI implementations even more efficient, and our answer is the new “test catalog” feature. Starting with the upcoming fall release, the test catalog will be fully available to all customers. It marks an important step toward automated, scalable and secure AI adoption.

In this article, you will learn:

- The key stages that successful AI projects go through

- How the test catalog reduces the effort in the test and validation phase

- How to keep your data and models up to date and compliant after implementation

Three milestones of successful AI implementations

Successful AI projects move through three clearly defined stages, from legal approval to quality assurance of the AI's answers.

1. Ensure AI compliance

In the first phase, the focus is on obtaining organizational and data protection approvals. The earlier these requirements are settled, the sooner the technical implementation can begin. We support our customers with ready-made document templates and best-practice examples that speed up the process considerably while ensuring compliance with the EU AI Act and the GDPR.

2. Compile the appropriate data set

The implementation phase begins with compiling and evaluating suitable data. We draw on our experience from numerous customer projects and support customers with methods for selecting, enriching and evaluating their data.

The data evaluation itself is carried out by the customer, but whether the data quality is sufficient usually only becomes apparent in the test phase of the AI application. This is precisely where the new test catalog comes in.

3. Ensure the response quality of the AI

Until now, ensuring high response quality meant writing test questions by hand and manually logging incorrect answers. Although this process was effective, and customers often found it engaging, it required a great deal of manual effort.

The new test catalog fundamentally changes this:

- Test questions are generated automatically

- Results are systematically evaluated

- The system provides targeted recommendations for measures to further improve quality

This removes a large share of the project effort. Companies can put their AI into production faster, optimize it continuously, and expand their AI environment securely and efficiently.

Success in record time: AI launches in one month instead of three

With the new test catalog, AI projects move much faster: the average time to launch drops from three months to just one. The automated validation process substantially reduces the manual testing effort in AI controlling and frees up time for what matters most: the further development of your AI strategy.

Be part of the fall release now!

Register now for our fall release event on November 19, starting at 9:30 a.m., and see a live demo of the test catalog that shows how it drastically reduces the effort involved in quality assurance and AI controlling.